A surrealist poem, an essay on the Industrial Revolution, a debate on censorship… ChatGPT, OpenAI’s new conversational bot, can write just about anything; all you have to do is ask. Launched a few days ago, this tool uses artificial intelligence to automatically write texts on any requested subject. And it is making a strong impression on the industry. In less than a week, more than a million people, from experts and researchers to ordinary Internet users, have enthusiastically tested the tool and shared on social networks the strikingly coherent texts it produced for them.

Here, for example, is ChatGPT’s answer to Eric Jang, vice-president of AI at Halodi Robotics, when he asked it about certain principles of thermodynamics.

And here is what ChatGPT wrote when Corry Wang, a senior analyst at Google, asked it for a four-paragraph essay comparing Benedict Anderson’s and Ernest Gellner’s theories of nationalism.

And here is what ChatGPT came up with when Marc Andreessen (one of the pioneers of the Web and co-founder of the venture capital firm Andreessen Horowitz) asked it to write a play in which a New York Times journalist and a Silicon Valley entrepreneur debate free speech.

“The AI is getting really good,” Elon Musk enthused on Twitter (Musk helped found OpenAI, originally a non-profit, but has held no position there since 2018). This progress is very encouraging news. But it also raises dizzying new challenges that need to be addressed now.

Are the days of take-home essays over?

Take text-generating AIs. They save valuable time for Internet users looking for information on a subject: instead of returning a list of Web pages, they offer, like a zealous secretary, a digest that is pleasant to read (Google would do well to adapt quickly to this new state of affairs). And while these AIs do not produce literary masterpieces, they make writing basic texts (administrative letters, website FAQs, etc.) instantaneous.

This is even truer of AIs that generate computer code, which looks mysterious to the layperson but is actually less complex than natural language. “AI will save coders a lot of time,” Thomas Dohmke, CEO of the software development giant GitHub, told us in October.

The coherence of ChatGPT’s texts, however, is enough to make teachers break into a cold sweat. They were already struggling against students copying and pasting from Wikipedia. AI-powered cheating will be much harder to detect, since students get a “new” text with every click. And even if these digital minds are hardly overflowing with originality (and sometimes go completely off the rails, particularly on recent events), some automatically generated texts are better than the work of less diligent pupils. The days of take-home essays may be over.

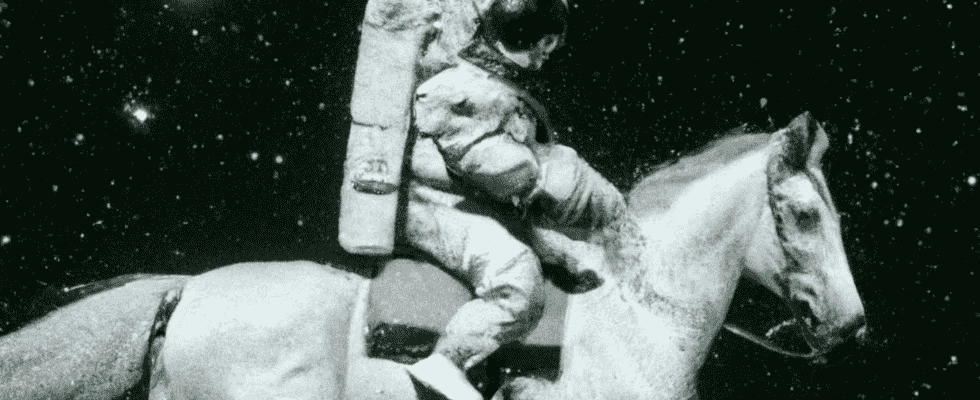

AIs that can generate images on demand are also upsetting the established order. With them, anyone can dream of being an artist and create sophisticated illustrations on any subject. All it takes is a few words describing the desired image, optionally with a particular style: an astronaut riding a unicorn in space, a lush jungle in the pointillist style, an alien city in a universe inspired by Jules Verne…

This is a revolution for low-budget organizations, which will finally have access to quality visuals, and for professionals in the field, who will be able to devote more time to other creative stages (designing a universe, refining details, etc.). But the transition will not happen without upheaval for jobs in the sector. Companies will almost certainly switch to these tools for their most basic needs (brochure visuals, etc.). And even if such commissions are not the most exciting, they are what allow many artists to make ends meet.

Violent images generated by AI

We must also consider the malicious uses of these generative AIs. Depicting nudity or violence is perfectly normal in art, and some AIs allow it. But these tools can be misused, for example to create fake nude images of real people without their consent. In September, Democratic congresswoman Anna Eshoo warned US regulators that Internet users were using these tools to produce images of “violently beaten Asian women”.

The most complex issue may well be the legal headache these tools create. Can images or texts created with the help of these AIs be protected by copyright? For people who just want to make a fun meme or a mundane presentation, the answer hardly matters, but for artists whose work relies on these tools, the question is crucial.

And this is not the only issue giving digital-law specialists headaches. These AIs that can “create” texts and images did not acquire this ability by magic. They were trained on huge databases of texts and images, from which they extract meaningful patterns that they then use to respond to our requests. Yet a large share of these texts and images are copyrighted works.

The burning question, then, is whether training on these works infringes the artists’ copyrights. Some artists have already been alarmed to see their signature style mass-reproduced by these programs. And it is a safe bet that at Microsoft, Adobe and the myriad start-ups specializing in generative AI, battalions of lawyers are preparing for this battle. It is hard to say who will win. One thing is certain: the boundaries of creation have begun to shift.