Nvidia is mapping out the road ahead. Alongside the announcement of its new Drive Hyperion 9 platform, which is built on its Atlan SoCs and will reach cars from 2026, the California company used its GTC 2022 conference to unveil Drive Map.

By 2024, this scalable mapping system will cover no less than 500,000 km of roads worldwide, mainly in North America, Europe and Asia. Nvidia says Drive Map, which will be available to the entire automotive industry, will provide verified map coverage that is continuously updated and expanded by millions of vehicles.

HD mapping, enriched and checked with AI

To build the most precise maps possible, Nvidia draws on the expertise of DeepMap, a start-up specializing in high-definition mapping that Jensen Huang's company acquired in June 2021. Drive Map combines the reliability of these HD maps with a mapping approach based on crowdsourcing and artificial intelligence.

The DeepMap data serves as a “verified base”, while millions of connected vehicles on the road continuously contribute new information which, once aggregated by algorithms, enriches that base.
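Nvidia does not detail its aggregation algorithms. As a rough illustration only, here is a minimal Python sketch assuming each vehicle reports the (x, y) position of a single landmark in metres: reports are merged with a median, and changes that stray too far from the verified base are flagged for review rather than applied. The `Landmark` class, the `aggregate_reports` function and its `min_reports` and `max_shift_m` thresholds are hypothetical.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Landmark:
    """Hypothetical map feature position in a local metric frame (metres)."""
    x: float
    y: float


def aggregate_reports(base, reports, min_reports=3, max_shift_m=0.5):
    """Merge crowdsourced (x, y) reports of one landmark into the verified base.

    Returns (landmark, status), where status is one of:
      "unchanged"    - too few reports to act on,
      "updated"      - median of reports is close to the base, so it is accepted,
      "needs_review" - reports disagree strongly with the base; keep base, flag it.
    """
    if len(reports) < min_reports:
        return base, "unchanged"
    # The median resists a minority of faulty or noisy vehicles better than the mean.
    mx = median(r[0] for r in reports)
    my = median(r[1] for r in reports)
    shift = ((mx - base.x) ** 2 + (my - base.y) ** 2) ** 0.5
    if shift > max_shift_m:
        # A large move (e.g. a relocated sign) is not trusted blindly.
        return base, "needs_review"
    return Landmark(mx, my), "updated"


# Hypothetical example: three consistent vehicles and one faulty one.
base = Landmark(10.0, 5.0)
reports = [(10.02, 5.01), (10.03, 4.99), (10.01, 5.02), (25.0, 40.0)]
landmark, status = aggregate_reports(base, reports)  # status is "updated"
```

Using the median rather than the mean is the key design choice here: the faulty report at (25.0, 40.0) cannot drag the landmark away from where the other vehicles saw it.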

From there, Nvidia extends its digital twin concept to a staggering scale: the entire Earth. The term “twin” is no exaggeration, since the Santa Clara company claims an accuracy of around five centimeters for its maps.

These maps will be used on real roads, but they will also sit at the very start of the chain that governs the development of self-driving cars: training the neural networks, then testing and finally validating the models.

This virtual world, built on Nvidia's Omniverse technology, will therefore be used for training, but also to monitor fleets of autonomous vehicles and to provide technical assistance when needed.

Three maps in one, three levels of redundancy

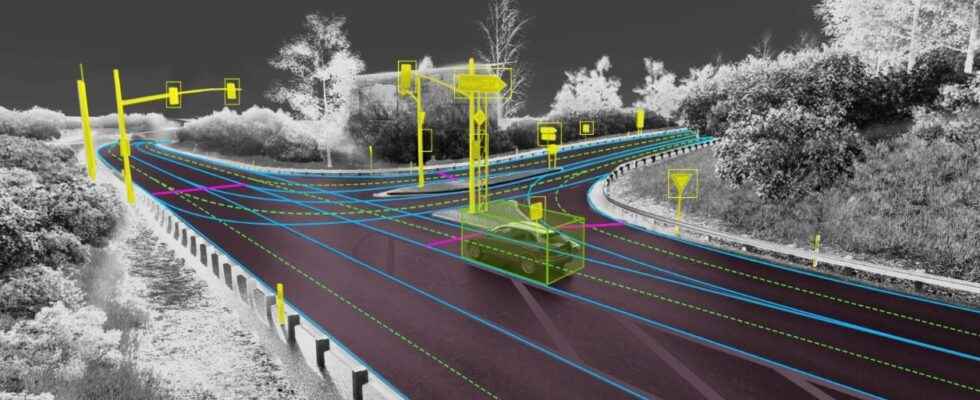

Both to build and to use the map, Drive Map stacks three localization layers, each produced by and used with a different type of sensor: camera, lidar and radar.

The AI driver can use each of these layers and sensors independently, which provides diverse means of localization and the redundancy that vehicle safety requires.

The camera layer thus gives it information on road markings, lane dividers and edges, traffic lights, signs and poles.

Next, the AI can rely on an aggregated point cloud of radar returns. This layer is particularly useful in low light, when cameras struggle, or in bad weather, which challenges both lidar and cameras.

According to Nvidia, radar is also useful in environments with few mapped features, where the AI driver can localize itself relative to surrounding objects.

Finally, lidar provides an even more precise and reliable layer describing the vehicle's surroundings, since it builds a 3D representation accurate to five centimeters.
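Nvidia does not say how the AI driver arbitrates between these layers. One common textbook way to combine independent position estimates is inverse-variance weighting; the minimal Python sketch below assumes each layer outputs a 2D position with a Gaussian uncertainty, and that a layer unusable at a given moment reports nothing. The `Fix` class, the `fuse_layers` function and the numbers in the example (apart from the roughly five-centimeter lidar precision mentioned above) are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Fix:
    """Hypothetical 2D position estimate from one localization layer (metres)."""
    x: float
    y: float
    sigma: float  # 1-sigma uncertainty, metres


def fuse_layers(fixes: Dict[str, Optional[Fix]]) -> Tuple[Fix, List[str]]:
    """Combine independent per-layer fixes by inverse-variance weighting.

    A layer reported as None (e.g. a camera blinded at night) is skipped, so the
    vehicle can still localize as long as at least one layer is available.
    """
    available = {name: f for name, f in fixes.items() if f is not None}
    if not available:
        raise ValueError("no localization layer available")
    # More certain layers (smaller sigma) get proportionally more weight.
    weights = {name: 1.0 / f.sigma ** 2 for name, f in available.items()}
    total = sum(weights.values())
    x = sum(w * available[n].x for n, w in weights.items()) / total
    y = sum(w * available[n].y for n, w in weights.items()) / total
    # Assuming independent Gaussian errors, fused sigma = 1 / sqrt(sum of weights).
    return Fix(x, y, total ** -0.5), sorted(available)


# Hypothetical night-time example: the camera layer is unavailable.
fused, used = fuse_layers({
    "camera": None,
    "radar": Fix(100.3, 50.1, 0.5),
    "lidar": Fix(100.0, 50.0, 0.05),
})
```

The fused estimate stays close to the lidar fix, which has the smallest uncertainty, and the vehicle keeps localizing even though the camera layer is missing. Real systems would also have to handle correlated errors and outlier layers, which this sketch ignores.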

Once it knows where it is, the AI can plan its path and drive the vehicle as safely as possible. Drive Map is naturally at the heart of the Drive Hyperion 8 platform and of its newly unveiled successor, Hyperion 9. Both platforms aim for Level 4 autonomy eventually, although the latter will only roll out from 2026.

Source: Nvidia Blog