Combining multiple photos becomes child’s play with Adobe Project Perfect Blend, which has been officially unveiled.

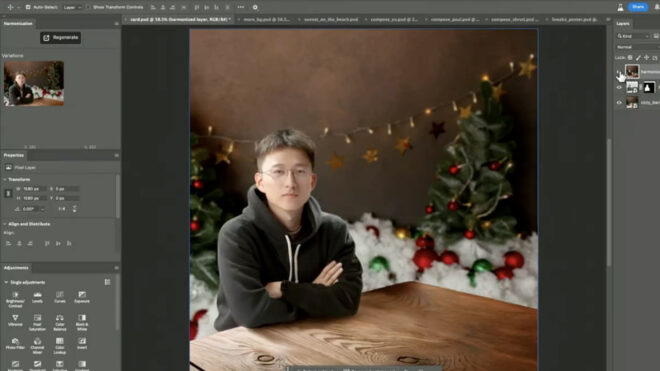

Shown at the 2024 Max event, Adobe Project Perfect Blend combines two different photographs as realistically as possible. Here is how the prototype system, which you can see in the X video just below, works. First, it analyzes the opened image and the photo of the person to be placed into it. Then the two images are brought together, and the light, color and shadow settings of the person added to the main image are adjusted automatically with the help of generative artificial intelligence. Adobe has not yet announced when the system, which delivers very realistic results, will be released. It is expected to deliver even better results by the time it reaches general availability. Project Perfect Blend is certainly among the most impressive systems shown at the Max event.

Excuse me what?! #ProjectPerfectBlend needs to come to Photoshop, like, now! 🤯 pic.twitter.com/OJQcZhm34S

— Howard Pinsky (@Pinsky) October 16, 2024


Adobe recently introduced other projects as well. For example, the audio-focused Project Super Sonic uses artificial intelligence to create sound effects for your videos. The system generates sound effects from written text, and it also relies on scene detection. This makes it possible to generate sound by selecting specific parts of the video frame: the system understands what the selected object is and automatically produces sound effects for it. It is not yet known when the system will be available, but it gives the impression that it will save a lot of time once it is released. It also offers alternatives for the generated content. The system can also use your voice. For example, if you voice a monster in an animation, the system takes your recording and can turn it into a realistic sound that fits a monster. What exactly Super Sonic can do is shown in the video.

The company announced important features for Premiere and Photoshop as part of the Adobe Max conference. The professional video editing software Adobe Premiere is being strengthened with the “Firefly Video” model. The first of several features built on this model to become available in beta in the software today is “Generative Extend”. This feature can extend the duration of selected video clips by up to 2 seconds, and it does so with the help of generative artificial intelligence, that is, by generating the additional footage. In addition to this capability, which is handy for fixing small errors, the “Text-to-Video” and “Image-to-Video” tools have also arrived. Thanks to Text-to-Video, Premiere can now generate videos from text, like OpenAI’s Sora.

Image-to-Video, on the other hand, can analyze uploaded videos and make changes to them. In this way, the need to re-record a video because of errors can be completely eliminated in some cases. Of course, this infrastructure has its limits. Currently, only five-second videos can be created, at 720p and 24 fps. Adobe states that generation takes 90 seconds.

Adobe is also introducing new generative artificial intelligence features for Photoshop. Photoshop, which has gained a tool that automatically selects and removes distracting elements from photos, is now switching its widely used “Generative Fill, Generative Expand, Generate Similar and Generate Background” tools to the Firefly Image 3 model, which delivers better results. Adobe, which also brought the Generative Expand feature to InDesign, continues to develop its Creative Cloud family.