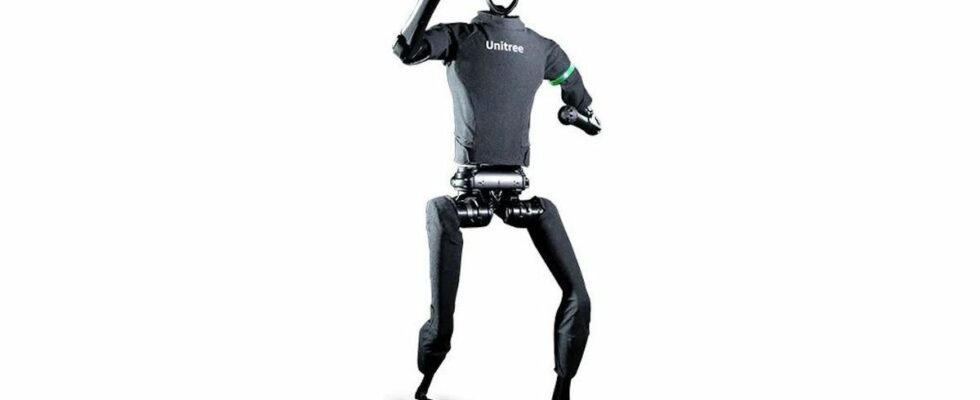

When we think of robots, images of cold, rigid machines often come to mind. However, according to a US study, teaching humanoid robots to imitate human movements, including dancing, could facilitate their integration into society and improve their cooperation with humans.

A study led by the University of California, San Diego, has demonstrated that robots can learn more expressive and human-like gestures thanks to an innovative motion capture technique: “Expressive Whole-Body Control” (ExBody). This advance aims to strengthen trust between robots and humans by making the former friendlier and more collaborative.

The San Diego engineers managed to teach their robot complex movements such as dancing, shaking hands, holding a door or even hugging. Their method relies on a vast collection of motion capture data and dance videos.

“Through expressive and more human-like body movements, we want to build trust and show that robots can coexist in harmony with humans,” said Xiaolong Wang, co-author of the study, in a statement. “We are working to help change the public’s perception of robots, seeing them as friendly and collaborative rather than terrifying like the Terminator,” he added.

After analyzing 10,000 hand position points and 10,000 trajectories across 4,096 simulated environments, the team developed a coordinated policy that allows the robot to perform complex gestures while simultaneously walking on varied surfaces such as gravel, dirt, grass and inclined concrete paths.

Concrete tests have already shown the effectiveness of humanoid robots in collaborative tasks with humans. Thanks to this new learning technique, robots have been used to regulate car traffic, demonstrating their ability to manage dynamic and complex situations. They have also been tested as basketball referees, where they were able to signal fouls and interact with players smoothly and precisely.

Currently, robot movements are controlled by a human operator using a gamepad, but the team is considering a future version equipped with a camera, allowing the robot to perform tasks and navigate autonomously.

“By extending the capabilities of the upper body, we can expand the range of movements and gestures that the robot can perform,” concluded Wang.