The reigning champion of chips dedicated to artificial intelligence made several announcements at Computex 2024. One of the most interesting concerns a technology for creating “digital humans”.

The technology giant Nvidia has established itself as the undisputed champion in the field of chips dedicated to artificial intelligence. Its circuits equip the vast majority of the servers and data centers used to train and run AI models. But the company's dominance in this sector owes as much to its vast ecosystem of software development tools as to its hardware.

Among the general public and gamers, its best-known AI technology is undoubtedly DLSS, a technique for improving and accelerating graphics rendering in video games. However, the company does not intend to limit itself to gaming and wants to extend its AI products to much broader areas of industry and services. One of the latest, announced at Computex 2024, is as fascinating as it is disturbing.

Nvidia ACE: digital humans powered by artificial intelligence

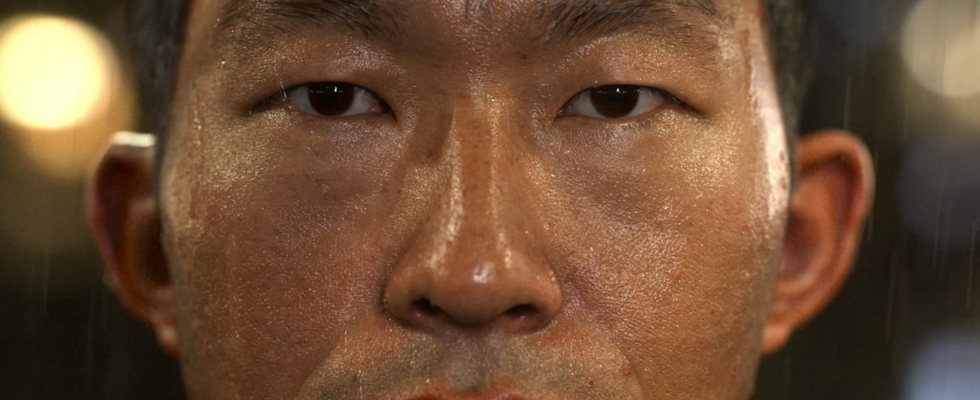

“Digital human microservices”: that is the name under which the company led by Jensen Huang presents its Nvidia ACE technology, an acronym for Avatar Cloud Engine. Behind this name lies a suite of software tools that can animate virtual characters and give them astonishing capabilities: text, voice and image recognition, natural language understanding, generation of text and voice responses, and facial animation and lip sync for 3D models, all in real time.

These technological feats are made possible by the combination of the computing power of Nvidia RTX chips on the one hand and the emergence of large language models (LLMs) on the other. Nvidia ACE is not entirely new: the company had already shown it a few months ago, presenting it as a way to make non-player characters in video games more interactive and lifelike. But what Nvidia is now offering seems to go much further.

In its Computex 2024 press release, the company in fact announces the “general availability of NVIDIA ACE generative AI microservices to accelerate the next wave of digital humans” and explicitly names three industries that should benefit from these tools: video games, obviously, but also customer service and healthcare.

Nvidia ACE’s proposition for these industries is simple: create true “digital humans”, each with a 3D visual representation and a voice, capable of interacting naturally and in real time with customers and patients. That could instantly make obsolete the clumsy chatbots we regularly run into on websites and voicemail systems, as ChatGPT did in 2022, but at an even higher level.

To bring these new kinds of virtual characters to life, Nvidia ACE consists of a suite of technologies, some of which are already available while others are expected soon:

- NVIDIA Riva ASR, TTS and NMT — for automatic speech recognition, text-to-speech conversion and translation

- NVIDIA Nemotron LLM — for language understanding and contextual response generation

- NVIDIA Audio2Face — for realistic facial animation based on audio tracks

- NVIDIA Omniverse RTX — for realistic skin and hair traced in real time

- NVIDIA Audio2Gesture — to generate body gestures based on audio tracks (not yet available)

- NVIDIA Nemotron-3 4.5B — a new small language model (SLM) specifically designed for low-latency local execution

Until now, this set of technologies and software tools was only accessible through powerful data centers, which limited its use to large companies and required servers to run “digital human” services. What Nvidia has just announced at Computex 2024 is the arrival of the Nvidia ACE suite directly on devices equipped with an RTX chip, both for creating virtual characters on the developer side and for running them on the user side. Because so many PCs are equipped with RTX graphics cards, it is easy to imagine the potential of this technology for the customer service and healthcare sectors mentioned above: technical support, after-sales service, telemedicine and sports coaching. The possibilities indeed seem numerous and promising.

But we can already foresee the harmful effects and misuses that the large-scale availability of this technology will inevitably bring. There is no doubt that scammers of all kinds will not hesitate to invest in a few RTX GPUs to set up new, ever more elaborate scams. Certain activists and pressure groups will use it to create even more realistic fake videos, and enthusiasts of the genre will produce ever more sophisticated pornographic deepfakes. Then there is the potential impact on employment in many care and service professions, which have already been hit hard by the rise of conversational AI such as ChatGPT.