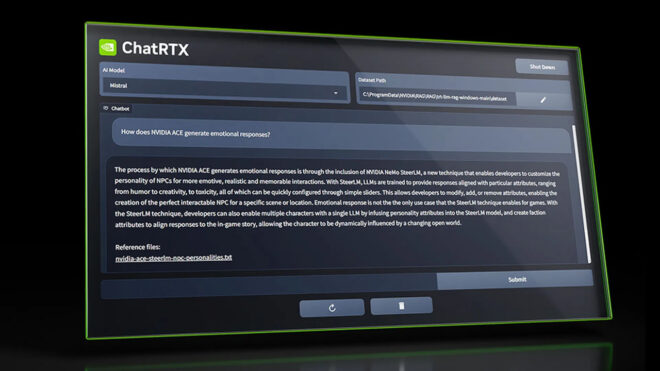

Nvidia, which remains the biggest name in the graphics card market, continues to update Chat with RTX, now renamed ChatRTX.

Developed by Nvidia, ChatRTX lets users run large language models (LLMs), the kind of models behind systems such as ChatGPT, on their own PCs. Because everything runs locally, the experience does not depend on remote servers. In addition to the RTX 40 series, it also supports RTX 30 series graphics cards, and 8 GB of VRAM is enough to use it. It can also run Meta's Llama 2 LLM locally. The application, whose download takes up between 50 GB and 100 GB depending on the model chosen, does not offer an experience as advanced as ChatGPT or Google Gemini (formerly known as Bard). However, its offline design makes it a safer option, and it can be used for many different tasks.

ChatRTX can generate answers by analyzing text files you provide, and it can quickly answer questions about a YouTube video by analyzing a linked video. The system has drawn attention and shows considerable potential.

With the latest update, ChatRTX is also reported to support Google's Gemma model, as well as ChatGLM3 and OpenAI's CLIP model. It now offers voice input too: Nvidia has integrated Whisper, an AI speech recognition system, which lets users search through their data using their voice.