OpenAI, the creator of ChatGPT and DALL-E, never stops releasing original tools built on artificial intelligence! Point-E, the latest, lets you create 3D objects from text prompts. Another revolution?

OpenAI never stops revolutionizing the world of artificial intelligence! After DALL-E, the text-to-image generator, and ChatGPT, the chatbot capable of holding conversations, answering questions and writing texts on all kinds of subjects with an almost human style and naturalness, the American company presented a new tool on December 20, 2022, as original as it is impressive. Christened Point-E, it generates colored three-dimensional (3D) models from simple text prompts. It does not produce the best visual rendering available, but it is by far the fastest tool of its kind to date. Above all, it is very easy to use. It marks a big step forward for generative AI systems, which are enjoying enormous success at the moment. Because the project is open source, the Point-E models and code are available in OpenAI's GitHub repository for anyone to consult.

Point-E: two AIs for the price of one

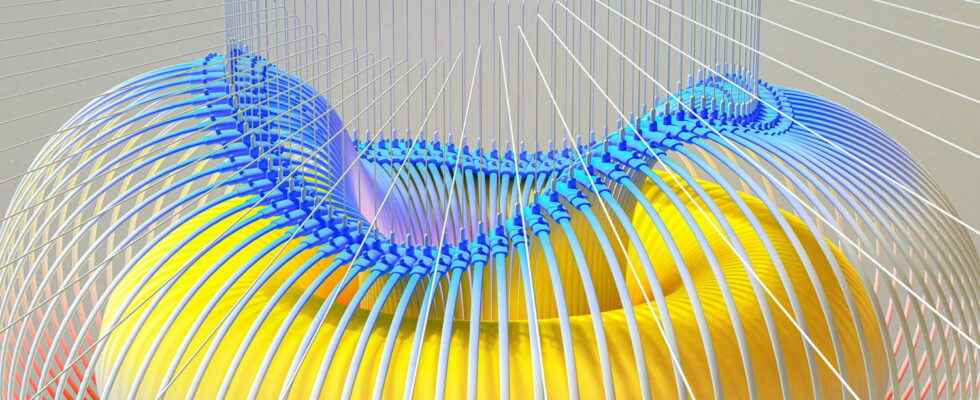

Normally, producing a computer-generated 3D model requires significant computing power and many hours, whereas Point-E can do it in one or two minutes on a single GPU (graphics processing unit). The process is quite simple. The user enters a sentence describing the desired object; the AI first produces a synthetic view of it, then a cloud of points that gives the overall shape of the model. “Our method first generates a single synthetic view using a text-to-image diffusion model, and then produces a 3D point cloud using a second diffusion model which conditions on the generated image,” explains OpenAI. In simpler terms, Point-E uses not one but two AIs: one that translates the text into an image, like DALL-E or Stable Diffusion, and a second that translates that image into a 3D model.
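The two-stage chaining described above can be sketched in a few lines of Python. This is only an illustration of how the stages fit together, under loud assumptions: `text_to_image`, `image_to_point_cloud` and `text_to_point_cloud` are hypothetical names, and the two "models" are random placeholders, not diffusion networks and not the code in OpenAI's repository. What the sketch does show is the data flow: a prompt becomes a synthetic view, and that view conditions the generation of a colored point cloud.

```python
import random

# Hypothetical types for illustration only.
Image = list[list[tuple[float, float, float]]]           # rows of RGB pixels in [0, 1)
Point = tuple[float, float, float, float, float, float]  # x, y, z, r, g, b


def text_to_image(prompt: str, size: int = 4, seed: int = 0) -> Image:
    """Stage 1 placeholder: stands in for a text-conditioned image diffusion model."""
    rng = random.Random(f"{prompt}:{seed}")  # deterministic per prompt and seed
    return [[(rng.random(), rng.random(), rng.random()) for _ in range(size)]
            for _ in range(size)]


def image_to_point_cloud(image: Image, num_points: int = 1024, seed: int = 0) -> list[Point]:
    """Stage 2 placeholder: stands in for an image-conditioned point cloud diffusion model."""
    rng = random.Random(seed)
    pixels = [px for row in image for px in row]
    points = []
    for _ in range(num_points):
        r, g, b = rng.choice(pixels)                       # color taken from the conditioning view
        x, y, z = (rng.uniform(-1, 1) for _ in range(3))   # position inside a unit cube
        points.append((x, y, z, r, g, b))
    return points


def text_to_point_cloud(prompt: str, num_points: int = 1024, seed: int = 0) -> list[Point]:
    """Chain the stages: text -> synthetic view -> colored point cloud."""
    view = text_to_image(prompt, seed=seed)
    return image_to_point_cloud(view, num_points=num_points, seed=seed)


cloud = text_to_point_cloud("a red motorcycle", num_points=256)
print(len(cloud), len(cloud[0]))  # 256 points, 6 values each
```

The split into two functions mirrors the design choice OpenAI describes: the second stage never sees the text, only the image produced by the first, so each stage can be trained on the kind of paired data that suits it. The default of 1024 points is arbitrary here.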

Point-E was trained with deep learning, first on a large number of text/image pairs so that it learns to associate words with visual concepts (a smart choice, since such pairs are far more plentiful than text/3D pairs), and then on image/3D model pairs so that it learns to translate one into the other. A real innovation! Needless to say, generating 3D models in a few minutes could interest many sectors.

Point-E: weaknesses to correct and limits to set

Revolutionary as it is, Point-E is far from perfect. While point clouds are computationally easy to synthesize, they do not capture an object's fine-grained shape or texture. To work around this limitation, a separate model is trained to convert point clouds into meshes, a geometric data structure that describes an object as a set of polygons defined by their vertices, edges and faces. But this step is not fully reliable yet, and it sometimes misses parts of the 3D model. Its use raises another problem: the 3D models generated by Point-E could be used to manufacture very real objects with a 3D printer. While this opens up many possibilities, it also invites misuse, such as the manufacture of weapons, which would then become easily accessible to the general public.
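The difference between a point cloud and a mesh comes down to connectivity, which a small Python sketch makes concrete. The class names `PointCloud` and `TriangleMesh` are hypothetical and this is not Point-E's internal representation; it simply assumes triangular faces, each storing three vertex indices, with edges derived from the faces. A point cloud is just a list of positions with no surface between them, which is why it cannot describe fine shape on its own.

```python
from dataclasses import dataclass

Vertex = tuple[float, float, float]


@dataclass
class PointCloud:
    points: list[Vertex]  # unconnected samples: no edges, no faces, no surface


@dataclass
class TriangleMesh:
    vertices: list[Vertex]
    faces: list[tuple[int, int, int]]  # each face indexes three vertices

    def edges(self) -> set[tuple[int, int]]:
        """Derive the unique undirected edges shared between faces."""
        result = set()
        for a, b, c in self.faces:
            for u, v in ((a, b), (b, c), (c, a)):
                result.add((min(u, v), max(u, v)))  # store each edge once
        return result


def tetrahedron() -> TriangleMesh:
    """The simplest closed mesh: 4 vertices joined by 4 triangular faces."""
    verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
    faces = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
    return TriangleMesh(verts, faces)


tet = tetrahedron()
cloud = PointCloud(points=tet.vertices)  # same positions, but no connectivity
print(len(tet.vertices), len(tet.edges()), len(tet.faces))  # 4 6 4
```

The same four points exist in both objects; only the mesh says which ones are joined into polygons. Converting a point cloud into a mesh therefore means inferring that missing connectivity, and any region where it is inferred wrongly shows up as the missing parts mentioned above.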

The possibilities offered by Point-E should interest many sectors, since 3D models are widely used in film, television, interior design, architecture and industry, for example to design vehicles, appliances or structures. OpenAI hopes its system “could serve as a starting point for further work in the field of 3D synthesis”. But the company is not alone in this field. Earlier in the year, Google unveiled DreamFusion, while Epic Games designed an app that generates a 3D object from photos taken with a smartphone.